The most common cloud data management mistakes

There are many reasons to migrate applications and workloads to the cloud, from scalability to easy maintenance, but moving data is never without risk. When IT systems or applications go down, the cost to the business can be severe: 98% of organizations surveyed by ITIC say that every hour of downtime costs them over $100,000. Mistakes are easy to make in the rush to compete, and a lot can go wrong if you don't plan properly. According to Gartner, at least 95% of cloud security failures through 2022 will be the customer's fault. To stay out of that group, you need to know the most common pitfalls. Here are seven mistakes that companies make in their rush to the cloud.

The common practice is for the data in a data center to be commingled and colocated on shared devices with unknown entities. Cloud vendors promise that your data is kept separate, but regulations require you to verify that it is. Access control is one example: basic cloud file services often fail to provide the same user authentication or granular control as traditional IT systems. The Ponemon Institute puts the average cost of a data breach at $3.6 million. Make sure you have a multi-layered data security and access control strategy that blocks unauthorized access and keeps your data encrypted wherever it is stored.

Your company data must be safe both at rest and in transit, and it must be recoverable if disaster hits. Consider the possibilities of corruption, ransomware, accidental deletion, and unrecoverable failures in cloud infrastructure. Plan for the worst-case scenario with a coherent and durable data protection strategy. Test that strategy to make sure it works and that it fits your budget and IT requirements.
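One way to test a data protection strategy is a restore drill: restore a backup and confirm that the result is byte-identical to the source. The following is a minimal sketch under stated assumptions, not a definitive implementation. The file names and the `run_restore_drill` helper are hypothetical, and plain file copies stand in for whatever backup and restore jobs you actually run.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 64 KiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def restore_matches_source(source: Path, restored: Path) -> bool:
    """True when the restored copy has the same digest as the source."""
    return sha256_of(source) == sha256_of(restored)


def run_restore_drill() -> bool:
    """Hypothetical drill: back up a file, restore it, compare digests."""
    with tempfile.TemporaryDirectory() as workdir:
        root = Path(workdir)
        source = root / "ledger.csv"          # illustrative file name
        source.write_bytes(b"id,amount\n1,100\n")

        backup = root / "ledger.csv.bak"
        shutil.copyfile(source, backup)       # stand-in for the backup job

        restored = root / "ledger.restored.csv"
        shutil.copyfile(backup, restored)     # stand-in for the restore job

        return restore_matches_source(source, restored)
```

In practice the digest of the source would be recorded at backup time, since the original may no longer exist when you need to restore.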

A shared, multi-tenant infrastructure can lead to unpredictable performance, and many cloud storage services lack the controls to tune performance parameters. Too many simultaneous requests, network overloads, or equipment failures can lead to latency issues and sluggish performance. Look for a layer of performance control for your data that gives all your applications and users the responsiveness they expect. Ensure that it can easily adapt as demand and budgets grow over time.

With storage snapshots and previous versions managed by dedicated NAS appliances, rapid recovery from data corruption, deletion, or other potentially catastrophic events was possible. But few cloud-native storage systems provide snapshotting or offer easy rollback to previous versions, leaving you reliant on current backups. You need flexible, instant storage snapshots that provide rapid recovery and rollback capabilities for business-critical data and applications.
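To make the snapshot and rollback idea concrete, here is a toy sketch of a key-value store that can capture a point-in-time copy and later return to it. The `SnapshotStore` class and its method names are hypothetical and exist only for illustration. Real storage systems typically use copy-on-write techniques rather than copying all data, so treat this as a model of the behavior, not of the implementation.

```python
from typing import Dict


class SnapshotStore:
    """Toy in-memory store with named point-in-time snapshots."""

    def __init__(self) -> None:
        self._data: Dict[str, bytes] = {}
        self._snapshots: Dict[str, Dict[str, bytes]] = {}

    def put(self, key: str, value: bytes) -> None:
        self._data[key] = value

    def get(self, key: str) -> bytes:
        return self._data[key]

    def delete(self, key: str) -> None:
        del self._data[key]

    def snapshot(self, name: str) -> None:
        # bytes values are immutable, so a shallow copy is a safe snapshot.
        self._snapshots[name] = dict(self._data)

    def rollback(self, name: str) -> None:
        # Replace live data with a copy so the snapshot itself stays intact.
        self._data = dict(self._snapshots[name])


store = SnapshotStore()
store.put("contract.doc", b"version 1")
store.snapshot("before-incident")

store.put("contract.doc", b"encrypted by ransomware")  # simulated corruption
store.rollback("before-incident")
recovered = store.get("contract.doc")  # b"version 1"
```

The same snapshot can be rolled back to more than once, because rollback copies from it instead of consuming it.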

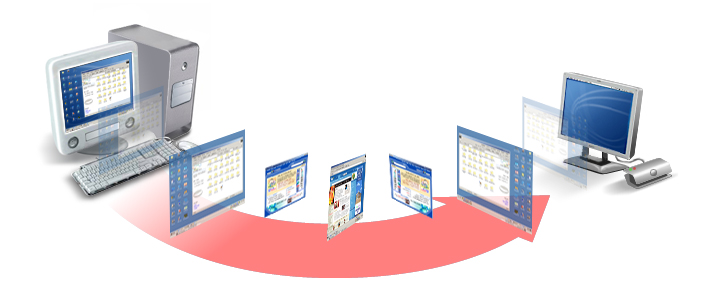

Almost 90% of organizations will move to a hybrid infrastructure by 2020, according to Gartner analysts. The driving force for companies is to optimize efficiency and control costs. You must assess your options and the impact they will have on your business. Consider how easily you can switch vendors in the future and how much code would have to be rewritten. Vendors want to lock you in with proprietary APIs and services, but you need to keep your data and applications multi-cloud capable so that you stay flexible and retain the option of changing cloud providers.
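One common way to limit the amount of code that must be rewritten when switching vendors is to have applications talk to a small provider-neutral interface instead of a vendor SDK. The sketch below illustrates that idea under stated assumptions. `ObjectStore`, `InMemoryStore`, `LocalDirectoryStore`, `migrate`, and `demo` are hypothetical names, flat keys without path separators are assumed, and a real deployment would add one adapter per cloud provider behind the same interface.

```python
import tempfile
from abc import ABC, abstractmethod
from pathlib import Path
from typing import Dict, List


class ObjectStore(ABC):
    """Provider-neutral interface that application code depends on."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...

    @abstractmethod
    def keys(self) -> List[str]: ...


class InMemoryStore(ObjectStore):
    """Stand-in backend that keeps objects in a dictionary."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def keys(self) -> List[str]:
        return sorted(self._objects)


class LocalDirectoryStore(ObjectStore):
    """Stand-in backend that stores each object as a file in one directory."""

    def __init__(self, root: Path) -> None:
        self._root = Path(root)
        self._root.mkdir(parents=True, exist_ok=True)

    def put(self, key: str, data: bytes) -> None:
        (self._root / key).write_bytes(data)

    def get(self, key: str) -> bytes:
        return (self._root / key).read_bytes()

    def keys(self) -> List[str]:
        return sorted(p.name for p in self._root.iterdir() if p.is_file())


def migrate(source: ObjectStore, target: ObjectStore) -> int:
    """Copy every object from source to target; return the number copied."""
    copied = 0
    for key in source.keys():
        target.put(key, source.get(key))
        copied += 1
    return copied


def demo() -> bytes:
    """Move one object between two backends through the shared interface."""
    source = InMemoryStore()
    source.put("report.txt", b"quarterly numbers")
    with tempfile.TemporaryDirectory() as workdir:
        target = LocalDirectoryStore(Path(workdir))
        migrate(source, target)
        return target.get("report.txt")
```

Because `migrate` only uses the interface, the same function would work between any two backends that implement it, which is the property that keeps a change of provider from rippling through application code.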